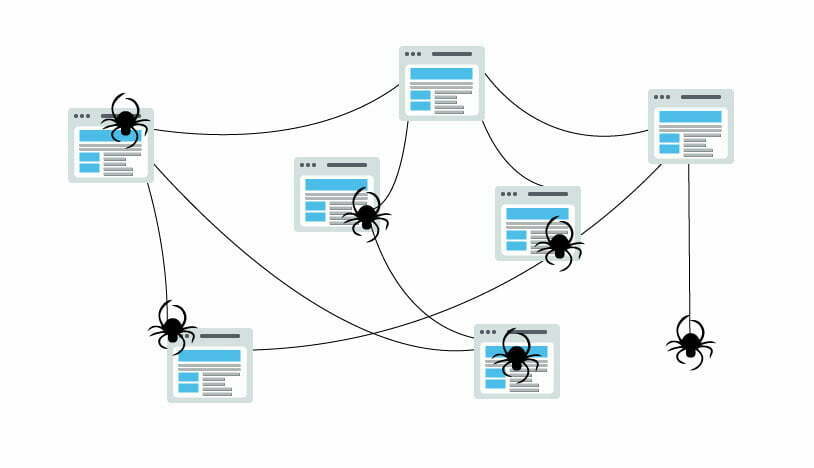

Every time you type a keyword into a search engine, there are thousands of webpages with useful information for you. How does a search engine figure out which results are best for you, and which pages match your needs? To serve good results, a search engine relies on three primary functions:

- Crawling: software programs called bots, crawlers, or spiders start by fetching a few web pages, then follow the links on those pages to discover the content at the URLs they find.

- Indexing: the URLs discovered during crawling are stored and organized in an index of the web. Once a page is indexed, it is ready to be displayed as a result for a relevant query.

- Ranking: the search engine provides the best answer for the searcher, which means results are sorted from most relevant to least relevant.

CRAWLING

Crawling is the discovery process for new and updated content (pages, videos, images, and so on). It is carried out by robots, known as crawlers or spiders, which fetch a few pages first, follow the links on those pages to other pages, and then follow the links on those pages in turn. The URLs they discover are added to the index (Google’s is called Caffeine, a massive database of discovered URLs), to be retrieved later when the content at a URL is a good match for the information a searcher is asking for.
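To make the "follow the links" idea concrete, here is a minimal sketch of link discovery. It is only an illustration, not how any real search engine is built: the `TOY_WEB` dictionary and the `crawl` function are hypothetical names, and the "web" is an in-memory map so the example runs without any network access.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
# A real crawler would fetch pages over HTTP and extract links from the HTML.
TOY_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def crawl(seed_urls, web):
    """Breadth-first discovery: return URLs in the order they are first visited."""
    discovered = []
    seen = set(seed_urls)      # avoids visiting the same URL twice
    queue = deque(seed_urls)   # pages waiting to be fetched
    while queue:
        url = queue.popleft()
        discovered.append(url)
        for link in web.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return discovered


print(crawl(["https://example.com/"], TOY_WEB))
```

Starting from a single seed page, the crawler reaches every page that is linked, directly or indirectly, from that seed. Pages that nothing links to would never be discovered this way.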

WHAT IS A SEARCH ENGINE INDEX?

During the crawling process, the search engine stores the information it finds in an index: a huge database of all the URLs it has discovered and judged good enough to serve to searchers.
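One common way to picture such a database is an inverted index, which maps each word to the pages that contain it. The sketch below assumes that structure purely for illustration; the page texts, URLs, and the `build_index` function are made up, and real search engine indexes store far more than this.

```python
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "https://example.com/tea": "green tea brewing guide",
    "https://example.com/coffee": "coffee brewing guide",
}

index = build_index(PAGES)
print(sorted(index["brewing"]))  # both pages mention "brewing"
print(sorted(index["tea"]))      # only the tea page mentions "tea"
```

With this structure, answering a query starts with a quick lookup of the keyword rather than a scan of every page.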

WHAT IS SEARCH ENGINE RANKING?

Ranking is the ordering of search results by relevance: the higher a website is ranked, the more relevant the search engine believes that site is to the searcher’s query.

When someone performs a search, there can be hundreds or thousands of possible results. How does the search engine rank them?

To decide, the search engine asks questions about each page. How many times does the page contain the keyword? Does the keyword appear in the title, the URL, or the tags? Does the page contain synonyms for the keyword, and how many? Does the page come from a quality website or from a bad one, such as a scam site? After answering these questions, the search engine generates the SERP (search engine results page) with the most relevant results for the searcher’s query.
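A few of these questions can be turned into a toy scoring function. This is only a sketch: the weights (3 for a title match, 2 for a URL match) are arbitrary assumptions chosen for the example, the `score` and `rank` functions are hypothetical, and real ranking uses many more signals than keyword counts.

```python
def score(page, keyword):
    """Toy relevance score built from keyword matches in body, title, and URL."""
    keyword = keyword.lower()
    body_hits = page["body"].lower().split().count(keyword)  # how many times?
    title_hit = keyword in page["title"].lower().split()     # in the title?
    url_hit = keyword in page["url"].lower()                 # in the URL?
    # The weights are illustrative assumptions, not real search engine values.
    return body_hits + 3 * title_hit + 2 * url_hit


def rank(pages, keyword):
    """Return pages sorted from most relevant to least relevant."""
    return sorted(pages, key=lambda page: score(page, keyword), reverse=True)


# Hypothetical indexed pages.
PAGES = [
    {"url": "https://example.com/coffee", "title": "Coffee basics",
     "body": "all about coffee"},
    {"url": "https://example.com/drinks", "title": "Hot drinks",
     "body": "coffee tea and cocoa"},
    {"url": "https://example.com/tea", "title": "Tea brewing guide",
     "body": "how to brew tea and store tea"},
]

for page in rank(PAGES, "tea"):
    print(score(page, "tea"), page["url"])
```

For the query "tea", the page that mentions the keyword in its title, URL, and body comes first, and the page that never mentions it comes last.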

WHAT IS A ROBOTS.TXT FILE?

In some cases, you want to keep search engine crawlers out of part of your site, or tell them to skip certain pages so they are less likely to end up in the index. These instructions are given in a file named “robots.txt”, located in the root directory of the site (e.g. domain.com/robots.txt).

Crawlers treat this file in a specific way:

- If the crawler can’t find a robots.txt file for the site, it proceeds to crawl the site.

- If the crawler finds a robots.txt file for the site, it usually abides by its instructions and suggestions as it crawls the site.

- If the crawler hits an error while trying to access the site’s robots.txt file and can’t determine whether one exists, it won’t crawl the site.
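As a rough illustration of how a well-behaved crawler might consult these rules, the sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the `ExampleBot` user agent, and the example.com URLs are assumptions made up for the example; the rules are parsed from a string so nothing is fetched over the network.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every crawler is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the rules without any network request

# A polite crawler checks each URL before fetching it.
print(parser.can_fetch("ExampleBot", "https://example.com/blog/post"))       # True
print(parser.can_fetch("ExampleBot", "https://example.com/private/report"))  # False
```

Note that the check happens entirely on the crawler’s side: the file only asks, and it is up to each bot to respect the answer.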

Blocking search engine bots from your sensitive pages may seem good for security, but not all web robots follow robots.txt. People with bad intentions build bots that ignore this protocol. In fact, some of them read robots.txt files to find out where you keep private content.

Although it might seem logical to block crawlers from private pages, it is better to noindex your private content and gate it behind a login than to list it in your robots.txt file.

For more details on this topic, see Moz.
